If you’re using a personal Adobe account, it’s easy to opt out of the content analysis. Open up Adobe’s privacy page, scroll down to the Content analysis for product improvement section, and click the toggle off. If you have a business or school account, you are automatically opted out.

Amazon: AWS

AI services from Amazon Web Services, like Amazon Rekognition or Amazon CodeWhisperer, may use customer data to improve the company’s tools, but it’s possible to opt out of the AI training. This used to be one of the most complicated processes on the list, but it’s been streamlined in recent months. This support page from Amazon outlines the full process for opting out your organization.

Figma

Figma, a popular design tool, may use your data for model training. If your account is licensed through an Organization or Enterprise plan, you are automatically opted out. Starter and Professional accounts, on the other hand, are opted in by default. You can change this setting at the team level by opening the settings, going to the AI tab, and switching off Content training.

Google Gemini

For users of Google’s chatbot, Gemini, conversations may sometimes be selected for human review to improve the AI model. Opting out is simple, though. Open up Gemini in your browser, click on Activity, and select the Turn Off drop-down menu. Here you can just turn off the Gemini Apps Activity, or you can opt out and also delete your conversation data. While this means that, in most cases, future chats won’t be selected for human review, data that has already been selected is not erased through this process. According to Google’s privacy hub for Gemini, these chats may stick around for three years.

Grammarly

Grammarly updated its policies, so personal accounts can now opt out of AI training. Do this by going to Account, then Settings, and turning the Product Improvement and Training toggle off. Is your account through an enterprise or education license? Then you are automatically opted out.

Grok AI (X)

Kate O’Flaherty wrote a great piece for WIRED about Grok AI and protecting your privacy on X, the platform where the chatbot operates. It’s another situation where millions of users of a website woke up one day and were automatically opted in to AI training with minimal notice. If you still have an X account, it’s possible to opt out of your data being used to train Grok by going to the Settings and privacy section, then Privacy and safety. Open the Grok tab, then deselect your data sharing option.

HubSpot

HubSpot, a popular marketing and sales software platform, automatically uses data from customers to improve its machine-learning model. Unfortunately, there’s not a button to press to turn off the use of data for AI training. You have to send an email to privacy@hubspot.com with a message requesting that the data associated with your account be opted out.

LinkedIn

Users of the career networking website were surprised to learn in September that their data was potentially being used to train AI models. “At the end of the day, people want that edge in their careers, and what our gen-AI services do is help give them that assist,” says Eleanor Crum, a spokesperson for LinkedIn.

You can opt out of having new LinkedIn posts used for AI training by visiting your profile and opening the Settings. Tap on Data Privacy and switch off the toggle labeled Use my data for training content creation AI models.

OpenAI: ChatGPT and Dall-E

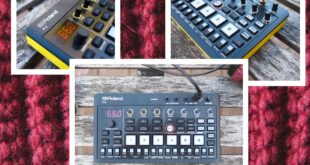

OpenAI via Matt Burgess

People reveal all sorts of personal information while using a chatbot. OpenAI provides some options for what happens to what you say to ChatGPT, including preventing its future AI models from being trained on the content. “We give users a number of easily accessible ways to control their data, including self-service tools to access, export, and delete personal information through ChatGPT. That includes easily accessible options to opt out from the use of their content to train models,” says Taya Christianson, an OpenAI spokesperson. (The options vary slightly depending on your account type, and data from enterprise customers is not used to train models.)
